Support teams spend 60-70% of their time on repetitive questions while complex issues wait. The cost isn't just inefficiency; it's frustrated customers and burned-out agents.

Single-model solutions force a tradeoff between automation and quality. A multi-agent architecture lets each agent be tuned independently: triage for classification, resolution for response quality, and a separate quality gate to catch failures.

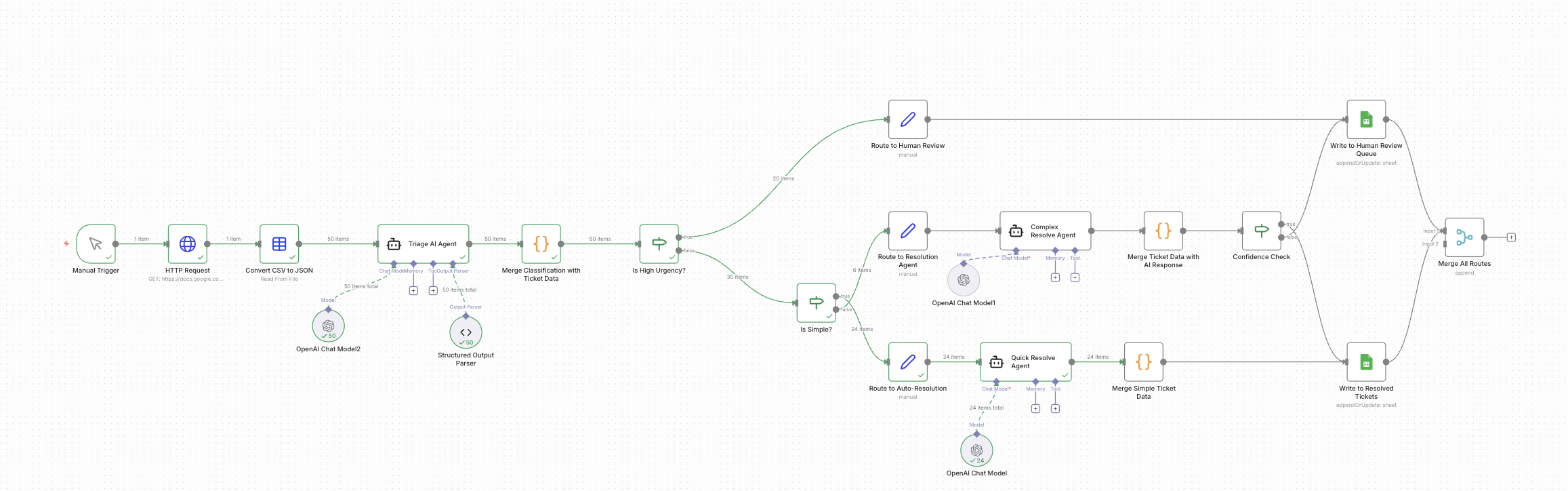

How It Works

Architecture Decisions

| Component | Technology | Purpose |
|---|---|---|
| Orchestration | n8n | Workflow automation connecting all agents and routing logic |
| Triage Agent | Claude Sonnet 4 | Classifies urgency, extracts intent, determines routing path |
| Quick Resolution Agent | GPT-4 + Vector DB | Handles straightforward queries with concise, direct responses |
| Complex Resolution Agent | GPT-4 + Vector DB | Handles technical/nuanced questions with detailed explanations |
| Confidence Check | n8n Rule-based Logic | Evaluates AI response quality before sending to customer |
| Human Routing | n8n | Escalates uncertain or urgent tickets with full context |
| Executive Dashboard | Looker Studio | Tracks metrics, patterns, and system health |
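The table implies a simple branch decision right after triage: urgent tickets go to a human, and everything else goes to the quick or complex resolution agent. Below is a minimal sketch of that rule in Python, assuming the triage step emits `urgency`, `intent`, and a hypothetical `complexity` field. The label values and the `route_ticket` helper are illustrative, not the workflow's actual n8n configuration.

```python
from dataclasses import dataclass

# Hypothetical label set; the source does not publish the triage categories.
URGENT_LEVELS = {"urgent"}


@dataclass
class Triage:
    urgency: str     # assumed scale: "low", "medium", "high", "urgent"
    intent: str      # assumed free-text label, e.g. "password_reset"
    complexity: str  # assumed field: "simple" or "complex"


def route_ticket(t: Triage) -> str:
    """Return the next workflow branch for a triaged ticket."""
    if t.urgency in URGENT_LEVELS:
        return "human"               # urgent tickets escalate immediately
    if t.complexity == "simple":
        return "quick_resolution"    # concise, direct answer path
    return "complex_resolution"      # detailed explanation path
```

For example, `route_ticket(Triage("low", "password_reset", "simple"))` returns `"quick_resolution"`. In n8n the same decision would more likely live in a Switch or IF node; the function form just makes the rule explicit.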

Human-in-the-Loop by Design: The system never sends uncertain responses to customers. When the confidence check detects a short response or explicit uncertainty ("I don't have that information"), it routes the ticket to human review along with the AI's draft response.
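The confidence check uses two rule-based signals: response length and uncertainty phrases. The sketch below illustrates that gate under stated assumptions. The 80-character threshold and every phrase except "I don't have that information" are hypothetical placeholders, and `passes_confidence_check` / `next_step` are illustrative names, not the author's code.

```python
# Hypothetical values: the source names the signals, not the thresholds.
MIN_RESPONSE_CHARS = 80
UNCERTAINTY_PHRASES = (
    "i don't have that information",  # phrase quoted in the source
    "i'm not sure",                   # assumed example
    "i don't know",                   # assumed example
)


def passes_confidence_check(response: str) -> bool:
    """Return True if the draft may be sent to the customer automatically."""
    text = response.strip()
    if len(text) < MIN_RESPONSE_CHARS:
        return False  # suspiciously short: send to human review
    # Lowercase and normalize curly apostrophes so "don\u2019t" matches "don't".
    lowered = text.lower().replace("\u2019", "'")
    return not any(phrase in lowered for phrase in UNCERTAINTY_PHRASES)


def next_step(draft: str) -> dict:
    """Send confident drafts; hand uncertain ones to a human with the draft."""
    if passes_confidence_check(draft):
        return {"action": "send", "body": draft}
    return {"action": "human_review", "draft": draft}
```

Keeping the gate as plain rules rather than a second model call makes it cheap and predictable. The tradeoff is that it only catches failures that look short or openly uncertain.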

Why Claude for triage and GPT-4 for resolution? Claude excels at structured classification, while GPT-4's native file search made retrieval-augmented generation (RAG) faster to implement. Matching the model to the task beats a one-size-fits-all approach.
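Structured classification only pays off if the output is checked before anything routes on it. One way to do that, assuming the triage prompt asks the model for a JSON object, is to validate every label against a fixed set and treat anything unexpected as an error. The schema below (`urgency`, `sentiment`, `complexity`, free-text `intent`) and the `parse_triage` helper are hypothetical.

```python
import json

# Hypothetical label sets; the source does not publish the triage schema.
ALLOWED = {
    "urgency": {"low", "medium", "high", "urgent"},
    "sentiment": {"positive", "neutral", "negative"},
    "complexity": {"simple", "complex"},
}


def parse_triage(raw: str) -> dict:
    """Parse the triage model's JSON reply and validate each label.

    Raises ValueError on malformed JSON or unknown labels, so the workflow
    can fall back to human routing instead of guessing.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"triage output is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("triage output must be a JSON object")
    result = {}
    for field, allowed in ALLOWED.items():
        value = data.get(field)
        # Reject non-strings first; lists are unhashable and can't be set members.
        if not isinstance(value, str) or value not in allowed:
            raise ValueError(f"invalid {field!r}: {value!r}")
        result[field] = value
    # Intent stays free text (e.g. "billing dispute"); missing means empty.
    result["intent"] = str(data.get("intent") or "").strip()
    return result
```

Failing loudly here is deliberate: a ticket whose classification can't be trusted is exactly the kind the system should hand to a person.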

What I Learned

- 💡 Quality gates prevent bad responses. Every AI response gets checked before reaching customers. Suspiciously short answers or uncertainty phrases ("I don't have that information") automatically route to human review.

- 💡 Escalation with context. Human support teams see classification, urgency, and sentiment upfront. They know immediately whether it's an angry billing dispute or a technical question.

- 💡 Multi-LLM beats a single model. Claude handles classification and GPT-4 handles resolution, each playing to its strengths. Specialized components beat one-size-fits-all.
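Escalating "with context" comes down to what the handoff record contains. A minimal sketch, assuming triage results arrive as a dict with `urgency`, `sentiment`, and `intent` keys. The `build_escalation` helper, the field names, and the headline format are hypothetical, not the actual n8n payload.

```python
from typing import Dict, Optional


def build_escalation(
    ticket_id: str,
    triage: Dict[str, str],
    reason: str,
    draft: Optional[str] = None,
) -> dict:
    """Bundle what a human agent needs into one handoff record (illustrative)."""
    # A scannable one-line summary shown first, e.g. "[HIGH] negative / billing dispute".
    headline = f"[{triage['urgency'].upper()}] {triage['sentiment']} / {triage['intent']}"
    return {
        "ticket_id": ticket_id,
        "headline": headline,
        "urgency": triage["urgency"],
        "sentiment": triage["sentiment"],
        "intent": triage["intent"],
        "reason": reason,   # assumed values, e.g. "urgent" or "failed_confidence_check"
        "ai_draft": draft,  # None when the ticket skipped the resolution agents
    }
```

Putting the reason and the rejected draft next to the classification means the agent can edit a draft rather than start from a blank reply.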